Using Python and SQL to Map Wikipedia by Page Category

Hypothesis

I hypothesize that the links between the categories of the webpages in wikipedia will show the connections between various categories and may reveal origins of fields that may be unintuitive.

The complete code can be found on github here.

Method

To solve this problem I separate the problem into 2. To begin, I create a web-scraper that starts at either a page from a sqlite database, or begins at the wikipedia homepage. The home page has a variation of different links that change every day so it is a good place to start to ensure a variation of wikipedia pages of different categories. The web-scraper iterates through the links on a wikipedia page and stores them in a sqlite database.

Once it has stored all the links in a given page, it moves to the next page in the database. Obviously this can go on ad infinitum so the pages are limited (to say 60) at a time, so we don't anger the internet service provider. The ultimate goal would be a databse of all the links in wikipedia.

Python Webscraping Script

See here ▼

import sqlite3

from lxml import html

import requests

# Open connection

conn = sqlite3.connect('wikiLinks.sqlite')

cur = conn.cursor()

cur.executescript('''

/*

Uncomment below to reset tables

*/

/*

DROP TABLE IF EXISTS Links;

DROP TABLE IF EXISTS Category;

CREATE TABLE Links (

id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,

link TEXT UNIQUE,

parent INTEGER,

topic INTEGER

);

CREATE TABLE Category (

id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,

topic TEXT UNIQUE

)

*/

''')

# Helper function to grab all links from the url tree and insert into database

def get_urls(url_tree, parent_id):

for child in url_tree.iter():

try:

if child.tag == 'a':

# print(child.get('href'))

cur.execute('''INSERT OR IGNORE INTO Links (link, parent)

VALUES ( ?, ? )''', ( child.get('href'), parent_id) )

except: continue

return None

# Initialise at the main page since it changes everyday

init_url = 'https://en.wikipedia.org/wiki/Main_Page'

pages = 20 # get all links from this number of pages

cur.execute('SELECT COUNT(link) FROM Links ')

init = int(round(cur.fetchone()[0] / 100.)) # start from database length/100 so we dont repeat

for i in range(pages):

# grab id from inital link

cur.execute('SELECT link FROM Links WHERE id = ? ', (init + i, ))

try: url = cur.fetchone()[0]

except: url = None

if url:

cur.execute('SELECT id FROM Links WHERE link = ? ', (init_url, ))

parenturl_id = cur.fetchone()[0]

try:

page = requests.get('https://en.wikipedia.org' + url)

tree = html.fromstring(page.content)

# all the links in the main content in the class 'mw-content-ltr'

tree_body = tree.find_class('mw-content-ltr')

tree_body=tree_body[0] # in list type for some reason

t1 = get_urls(tree_body, parenturl_id) # grab the urls from the tree

except: continue

conn.commit()

Next, the categories of the links are plotted in a network. A python script goes through a given amount of the wikipedia links from the sqlite database and returns their category. The script also goes to the link associated with the category and continues, i.e. 'electromagnetism -> subfields of physics -> physics -> physical sciences -> natural sciences -> nature ...'

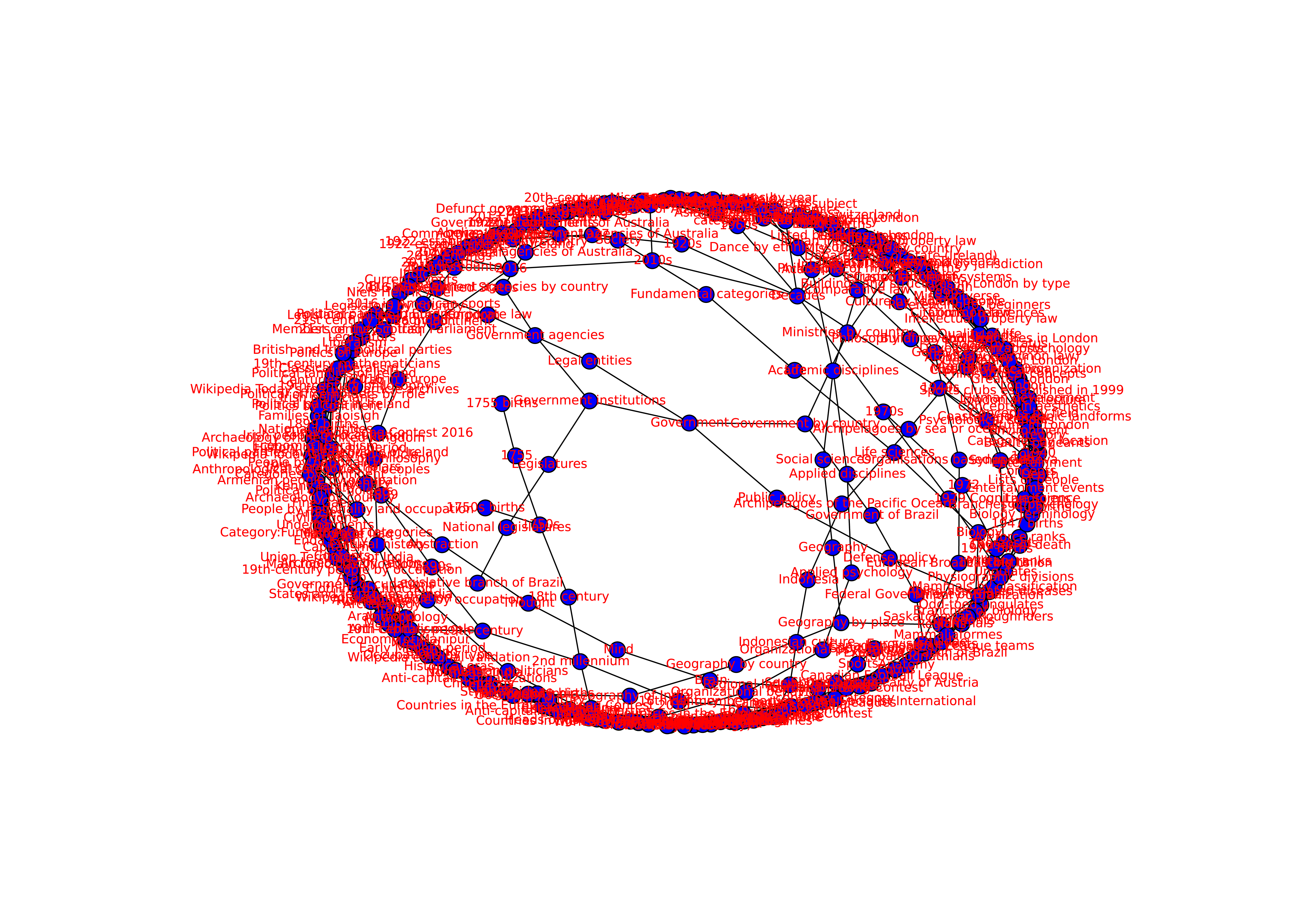

Each node of the network represents one webpage, and a connection from one node to another represents a hyperlink on one webpage, linking to the other.

Python Network Plotting Script

See here ▼

import sqlite3

from lxml import html

import requests

import networkx as nx

from pylab import *

conn = sqlite3.connect('wikiLinks.sqlite')

cur = conn.cursor()

cur.executescript('''

/*

Uncomment out the bottom section is want to reset the tables

*/

/*

DROP TABLE IF EXISTS Links;

DROP TABLE IF EXISTS Category;

CREATE TABLE Links (

id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,

link TEXT UNIQUE,

parent INTEGER,

topic INTEGER

);

CREATE TABLE Category (

id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,

topic TEXT UNIQUE

)

*/

''')

# Helper function to find link of given key in the html tree

def lookup(d, key):

found = False # want to find the tag after the key

for child in d.iter(): # iterate through html tree

if not found: # keep iterating unitl found is True

try:

if child.tag == 'a' and (key in child.text): # a finds the links

# print(child.text)

found = True

except: continue

else: # when found is True

if child.tag == 'a': # make sure it is a link

# print(child.text, child.attrib)

found = False

return child

G = nx.Graph() # Initialize graph

startpage = 40 # start index of database

testpages = 60 # How many links

for i in range(testpages): # iterate through links

cur.execute('SELECT link FROM Links WHERE id = ? ', (startpage + i, ))

try:

url = 'https://en.wikipedia.org' + cur.fetchone()[0] # database only contains latter part of link

page = requests.get(url) # go to URL

tree = html.fromstring(page.content) # get html tree

t1 = lookup(tree, 'Categories') # look for link after 'categories'

newtree = tree

categories = ['first'] # initialise category list

# while loop to find where catergory list should end

while t1.text != None and 'categor' not in categories[-1] and categories[-1] not in categories[:-2]:

t1 = lookup(newtree, 'Categories')

categories.append(t1.text)

newurl = 'https://en.wikipedia.org' + t1.get('href')

if categories[-2] != 'first':

G.add_edge(categories[-1], categories[-2]) # add to graph if not the first in the list

# print(t1.text)

newpage = requests.get(newurl)

newtree = html.fromstring(newpage.content)

# print(categories)

# update database

if len(categories) >= 2:

cur.execute('''INSERT OR IGNORE INTO Category (topic)

VALUES ( ? )''', ( categories[-2], ) )

cur.execute('SELECT id FROM Category WHERE topic = ? ', (categories[-2], ))

category_id = cur.fetchone()[0]

else:

category_id = 'Null'

cur.execute('''UPDATE Links SET topic = ?

WHERE id = ?''', (category_id, startpage + i) )

except: continue

conn.commit() # commit to database

# plot network plot

pos = nx.spring_layout(G)

nx.draw_networkx_nodes(G,pos,node_size=100,node_color='b')

nx.draw_networkx_edges(G,pos,width=1)

nx.draw_networkx_labels(G,pos,font_size=8,font_family='sans-serif',font_color='r')

savefig("wikiNetwork.png")

show()

The resulting network is then read from the sqlite database, plotted and saved to a png.

Conclusion

Though the web scraper works very well and the database of hyperlinks for webpages is populated very quickly, even for 60 links (plus all the webpages within each) the network graph is very crowded. Plotting the network on a map may not be the best way to visualize the interconnections between the webpages of wikipedia.

A better approach would be bin the webpages in categories and plot the correlations between webpage categories in a 2D histogram. Using a 2D histogram, although we lose the information of the name of the webpage, as the network plot shows, a much greater number of pages could be shown on the same graph. For each entry in the bins of the 2D histogram, would represent a hyperlink from one category (shown on the x-axis), to another catgory (shown on the y-axis). plot.ly have a number of good examples, and would make a good project for another time.